Recognition and Classification of Human Emotions From Facial Expressions

AI and Human Emotions

Motivation

Facial emotion recognition (FER) is a key field with applications in healthcare, human interaction, and human-machine interaction. It plays a vital role in inferring people's psychological states during social interactions. Machines that recognize emotions from facial expressions could improve user and customer satisfaction by responding to people's mental states. Although emotion recognition from speech is also important, facial expressions are often the first indicators of emotion, especially when verbal cues are absent. Because much of human communication is visual (an often-cited estimate puts it at 55%), vision-based FER techniques are essential for identifying emotions.

Proposed Algorithm and Model Implementation

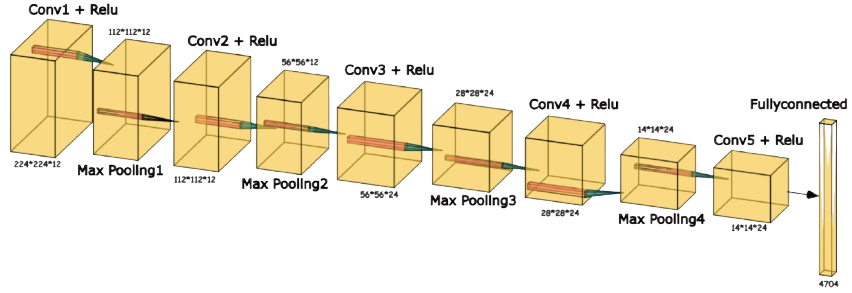

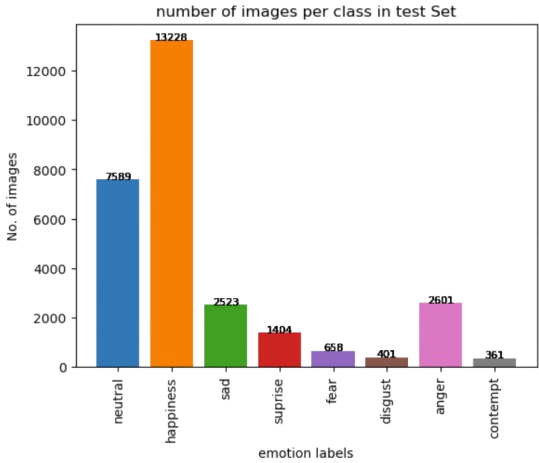

This project introduces a facial expression classification algorithm that uses a shallow neural network architecture to recognize and categorize human emotions. The system is trained on images from the AffectNet dataset to classify eight primary emotions: neutral, happiness, sadness, surprise, fear, disgust, anger, and contempt. The dataset is split into mini-batches so that memory usage stays bounded during training. For each batch, the model performs a forward pass, computes the mean cross-entropy loss, and updates its weights through backpropagation. At inference time, the predicted label for an image is the class with the highest output score (argmax). After training on AffectNet, the model is deployed for real-time emotion classification, using OpenCV face detection to locate faces in each video frame. We also compare its performance with a baseline neural network and a pre-trained ResNet18 model.
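The training and prediction steps described above can be illustrated with a small NumPy sketch. It is not the project's code. A linear softmax classifier on flattened feature vectors stands in for the CNN. The function names (`iterate_minibatches`, `mean_cross_entropy`, `train_softmax_classifier`, `predict`) and the hyperparameters are hypothetical. The sketch shows mini-batching, the mean cross-entropy loss, the gradient of that loss with respect to the logits (softmax probabilities minus the one-hot labels, divided by the batch size), and argmax prediction.

```python
import numpy as np


def iterate_minibatches(n_samples, batch_size, rng):
    """Yield shuffled index arrays, one batch at a time.

    In a real pipeline each index batch would be used to load only those
    images from disk, so the full dataset never has to sit in memory.
    """
    order = rng.permutation(n_samples)
    for start in range(0, n_samples, batch_size):
        yield order[start:start + batch_size]


def softmax(logits):
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum(axis=1, keepdims=True)


def mean_cross_entropy(logits, labels):
    """Average negative log-probability of the true class over the batch."""
    probs = softmax(logits)
    return -np.mean(np.log(probs[np.arange(len(labels)), labels] + 1e-12))


def train_softmax_classifier(X, y, num_classes=8, batch_size=32,
                             epochs=20, lr=0.1, seed=0):
    """Hypothetical stand-in model: mini-batch gradient descent on a linear layer."""
    rng = np.random.default_rng(seed)
    W = np.zeros((X.shape[1], num_classes))
    b = np.zeros(num_classes)
    for _ in range(epochs):
        for idx in iterate_minibatches(len(X), batch_size, rng):
            xb, yb = X[idx], y[idx]
            logits = xb @ W + b                   # forward pass
            grad = softmax(logits)
            grad[np.arange(len(yb)), yb] -= 1.0   # d(mean CE)/d(logits) = (p - onehot) / N
            grad /= len(yb)
            W -= lr * (xb.T @ grad)               # backpropagate to the weights
            b -= lr * grad.sum(axis=0)
    return W, b


def predict(X, W, b):
    """Predicted label = index of the largest class score."""
    return np.argmax(X @ W + b, axis=1)
```

With a CNN, only the forward pass and the backpropagation step change. The batching, the loss, and the argmax prediction stay the same.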

Baseline CNN with five convolutional layers and a fully connected layer.
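The following PyTorch sketch shows one possible version of such a baseline. The layer details are not given here, so several choices are assumptions: the framework, the input size (3x96x96 RGB face crops), the channel widths, the batch normalization and pooling layers, and the class name `BaselineCNN`.

```python
import torch
from torch import nn


class BaselineCNN(nn.Module):
    """Hypothetical baseline: five conv blocks followed by one fully connected layer."""

    def __init__(self, num_classes: int = 8, in_channels: int = 3) -> None:
        super().__init__()
        channels = [in_channels, 32, 64, 128, 128, 256]  # assumed widths
        blocks = []
        for c_in, c_out in zip(channels[:-1], channels[1:]):
            blocks += [
                nn.Conv2d(c_in, c_out, kernel_size=3, padding=1),
                nn.BatchNorm2d(c_out),
                nn.ReLU(inplace=True),
                nn.MaxPool2d(2),  # halves the spatial size
            ]
        self.features = nn.Sequential(*blocks)
        self.pool = nn.AdaptiveAvgPool2d(1)  # collapse each feature map to 1x1
        self.classifier = nn.Linear(channels[-1], num_classes)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        x = self.features(x)
        x = self.pool(x).flatten(1)
        return self.classifier(x)  # raw logits, one score per emotion
```

The adaptive pooling layer before the linear layer lets the same head accept other input resolutions. The model returns logits, which can be passed directly to `nn.CrossEntropyLoss`. That loss applies log-softmax internally and averages over the batch by default.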

Dataset

In this work, we trained our convolutional neural network on AffectNet, a benchmark dataset for facial expression recognition.
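This standard-library sketch reads annotations for the eight emotion classes. It assumes a CSV file with one row per image, an image-path column, and an integer label column encoded 0 to 7 in the order of the emotions listed earlier. The column names, this file layout, and the `load_annotations` function are hypothetical placeholders, not AffectNet's documented format. Rows with any other label code are skipped.

```python
import csv
from typing import List, Tuple

# Assumed class order, matching the eight emotions listed earlier.
EMOTIONS = ["neutral", "happiness", "sadness", "surprise",
            "fear", "disgust", "anger", "contempt"]


def load_annotations(csv_path: str, path_col: str = "path",
                     label_col: str = "label") -> List[Tuple[str, int]]:
    """Return (image path, class index) pairs for the eight emotion classes.

    Column names are placeholders; adapt them to your copy of the annotations.
    """
    samples = []
    with open(csv_path, newline="") as f:
        for row in csv.DictReader(f):
            label = int(row[label_col])
            if 0 <= label < len(EMOTIONS):  # drop codes outside the eight classes
                samples.append((row[path_col], label))
    return samples
```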

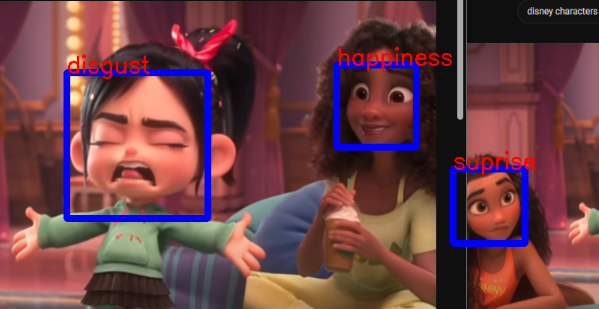

Live Demo

The video below demonstrates the real-time emotion recognition system in action.